Introduction: Next Generation Research Computing

Research computing within the arts and humanities has evolved in tandem with rapidly advancing digital methodologies, nuanced datasets, and increasingly robust web programming environments. More than ever, scholars are engaging with shared, scalable research ecosystems that often include content annotation, text/network analysis, data visualization, and crowdsourcing functionalities, among others.

Despite these major shifts within the digital research landscape, commonly adopted databases for content storage and retrieval do not always prioritize the needs of an increasingly sophisticated user base, nor have they been optimized for the immediacy and scalability that modern research applications demand.

The following document surveys modern databases and theories of data modeling in order to compare and contrast differing approaches to database-driven digital research. All examples within this overview represent a selection of projects designed by Harvard University faculty in collaboration with Arts & Humanities Research Computing. Each makes use of varying database technologies, both common and cutting-edge, and was designed with long-term, flexible, and sustainable research applications in mind.

Relational Databases as a Point of Departure

Relational databases are the most commonly used database technology. They were originally developed to keep track of large numbers of interrelated people, objects, and processes, such as patients in a hospital, students at a university, or books that Amazon sells. The software has become so standard and cost effective that relational databases have become the default technology, even when they may not be the best tool for the job.

There are proprietary variations among relational database applications, but generally speaking all share the same fundamental data model: organizing information in one or more tables of rows and columns. Tables contain people, objects, events, etc. of a single type (patients, students, books). Rows describe information pertaining to one instance (single patient, particular student, specific book). Columns contain fields, a type of data that describes aspects of an item within a table (patient name, student campus address, book title). Typically, one of the fields will be a unique identifier that can label a specific row in the table (patient ID, student ID, ISBN).

The most basic example of this is the Excel spreadsheet and its related forms (comma/tab separated values). Relational databases have been in use for decades, and remain the most flexible choice for data storage and retrieval because many are open-source and have large communities of practice. MySQL is perhaps the most popular open source database for digital research projects, blogs, and other web applications.

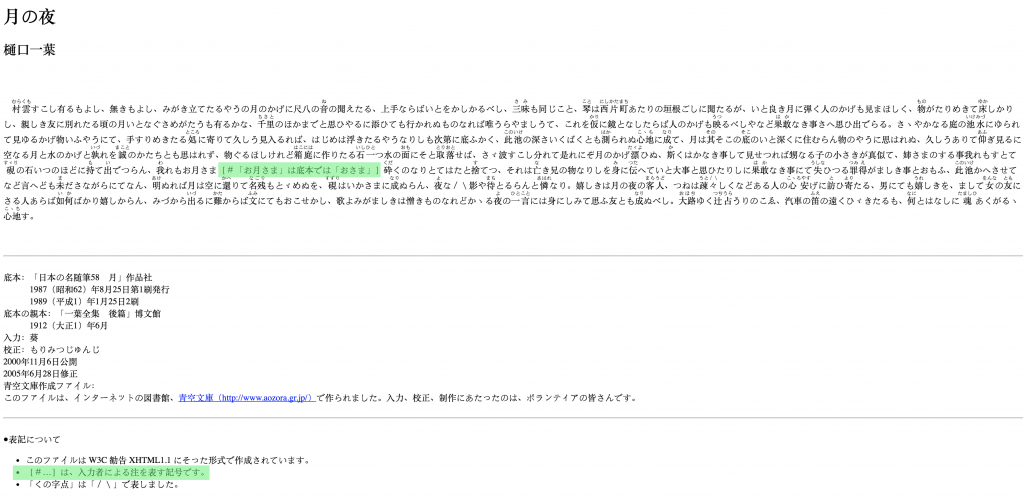

Good & Bad Relations: Design Thinking for an Opera Social Network

In order to illustrate relational database fundamentals, the following example will walk through the process of creating a simple social network of operas and their performances around the world. This demonstration draws on data from Operabase, an online archive containing information about performances, artists, opera companies, and more.

[/vc_column_text][vc_single_image media=”49584″ caption=”yes” media_width_percent=”80″ alignment=”center”][vc_column_text]

Fidelio, the only opera written by Ludwig van Beethoven, tells the story of Leonore, who disguises herself as a prison guard to free her husband Florestan from certain death within a political prison. It narrates a heroic story about liberty and justice, and has multiple performances playing around the world in 2017.

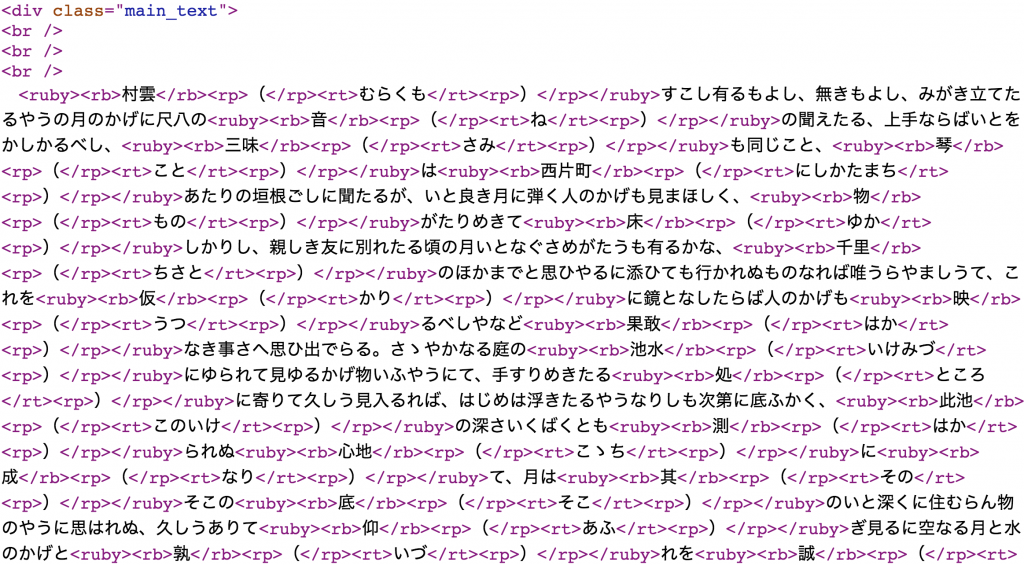

Beethoven’s opera is one of many within the Operabase archives. In a relational database model, these entries would exist within a table called operas. Each row would represent one opera and each column would represent a particular type of data about the opera such as NAME, ID, COMPOSER, FIRST_PERFORMANCE, etc. Operas are only one type of table. Other tables may include artists, opera companies, theaters, and more. Here is an example of what the operas table may look like:

[/vc_column_text][vc_single_image media=”49588″ caption=”yes” media_width_percent=”100″ alignment=”center”][vc_column_text]

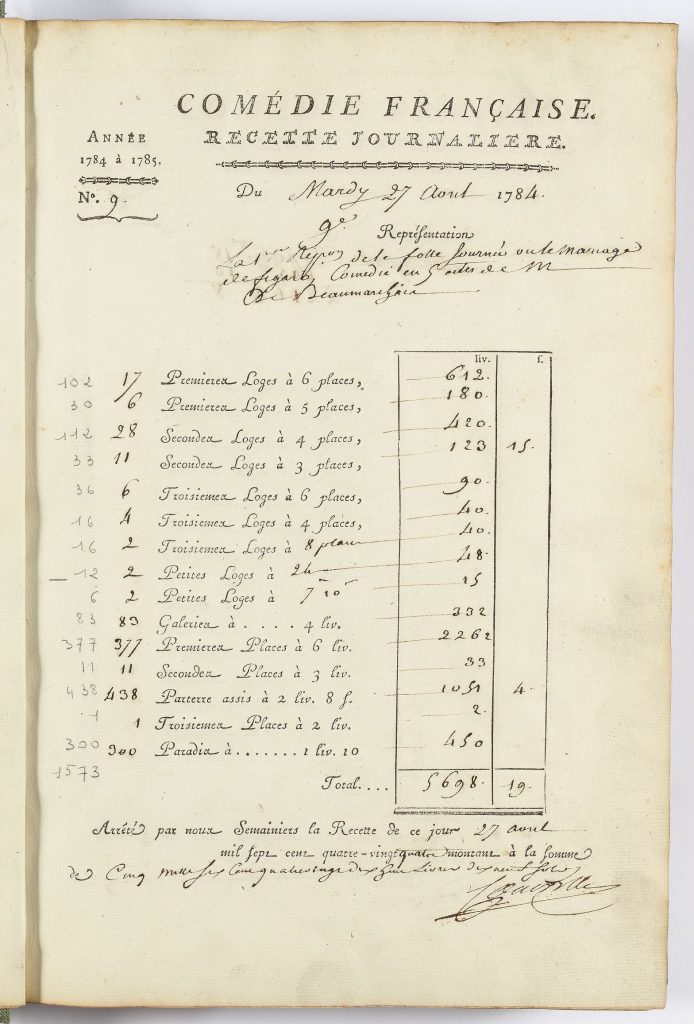

In order to connect performance dates, locations, and other information with each opera, the user must create new tables to represent these additional entities. The first table will be called theaters, and the second table, called performances, will combine information from operas and theaters into a single item, a performance of a particular opera at a specific opera house. First, here is an example of the theaters table:[/vc_column_text][vc_single_image media=”49589″ caption=”yes” media_width_percent=”100″ alignment=”center”][vc_column_text]Each theater has a unique THEATER_ID, NAME, COUNTRY, CITY, CAPACITY, SEASON, and WEBSITE. According to Operabase, each theater above will host a production of Fidelio during the 2017 season. Furthermore, each performance will have its own respective beginning and closing dates.

The performances table will illustrate the fundamental behavior of the relational database model: creating relationships between tables. This new table will store performance begin/end dates, and will also draw from the operas table and the theaters table to create instances of connected, queryable information. Connecting data in this manner is called joining, and data joins can serve to create permanent new tables within a database, or as a way of connecting data in a live instance to be used and then discarded from memory once the query has completed. In this example, the data join will connect information together within one table that will become a permanent part of the underlying database.

[/vc_column_text][vc_single_image media=”49595″ caption=”yes” media_width_percent=”100″ alignment=”center”][vc_column_text]

Within this table structure, PERFORMANCE_BEGIN and PERFORMANCE_END connect to a respective opera and theater, referenced by OPERA_ID and THEATER_ID. In the original operas table, the unique id representing each opera—also called a primary key—is used to refer to itself from within another table. Establishing a primary key within a table allows for the relational database to be able to distinguish between unique entries within the table.

When an item from one table is referenced within another table, the primary key in the original table links to the secondary table in the form of a foreign key. In other words, in the operas table, Fidelio has a primary key (id number) of 1. In the performances table it is necessary to use that same unique id number to instruct the database to pull opera number 1 from the original table; however, within the new table, the primary key of 1 shifts to become a foreign key, which provides instructions to the database that the key originally comes from the operas table, and that is where it can look to find the original values.

This is a basic example of a growing network of operas, theaters, and performances. For a more robust social network, it would be necessary to create separate tables for performers, composers, opera companies, and more. This would dramatically increase the complexities of the database as new relationships would have to be mapped to performance instances. Beyond performances alone, the complexity of the data would also sharply increase if relationships between performers, composers, and other artists were described.

Mapping data to a specific structure within a database is called data modeling, and with relational databases the data model is extremely important for the integrity of the database. One of the most important factors in the use of relational databases is the time spent planning before any data can be entered. This planning must account for potential changes or augmentations to the original data in advance, because increasingly complex datasets can be challenging to append to pre-structured tables. Graph Databases: New Opportunities for Connected Data asserts “whereas relational databases were initially designed to codify paper forms and tabular structures—something they do exceedingly well—they struggle when attempting to model the ad hoc, exceptional relationships that crop up in the real world. Ironically, relational databases deal poorly with relationships” (Robinson, Webber & Eifrem, 11).

For arts and humanities research computing projects, creating meaning amongst data is as important, if not more important, than storing the data in the first place. Scholars increasingly look to database technologies with the express purpose of distant reading their data, finding patterns, and testing queries. Thus, the fundamental goal is always to create and expose relationships among data, and the manner of approaching this problem differs depending on the type of project in question.

The following use cases will demonstrate how newer types of databases, known collectively as NoSQL, can more elegantly accommodate arts and humanities data. This new generation of technologies breaks the dominance of relational tables and fields to allow for a more intuitive and robust environment to explore and ask questions of data.

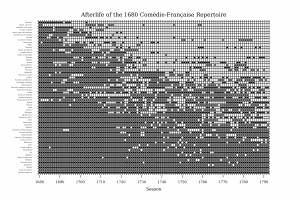

Faculty Case Study: The Giza Project

The Giza Project at Harvard University provides access to “the largest collection of information, media, and research materials ever assembled about the Pyramids and related sites on the Giza Plateau.” The project is led by Peter Der Manuelian, Philip J. King Professor of Egyptology and Director of the Harvard Semitic Museum. Rashmi Singhal of Arts & Humanities Research Computing oversees data architecture and technical development for the project’s website Digital Giza.

Digital Giza is a veritable research environment consisting of highly structured scholarly big data: photos, bibliographies, dates, dig site findings, drawings, documents (published and unpublished), diary entries, ancient people (e.g. identified bodies within a tomb), modern people (e.g. archaeologists, photographers, scholars, etc.), and a host of other archeological information. The goal of the integrated research platform, featuring unique pages for each entity within the database and associated information, is illustrated in the following image of the tomb of Meresankh III:

[/vc_column_text][vc_single_image media=”49694″ caption=”yes” media_width_percent=”100″ alignment=”center”][vc_single_image media=”49695″ caption=”yes” media_width_percent=”100″ alignment=”center”][vc_single_image media=”49696″ caption=”yes” media_width_percent=”100″ alignment=”center”][vc_column_text]

The underlying database that informs Digital Giza is called The Museum System (TMS), a relational database optimized for museums and archival collections. TMS serves the needs of the Giza team, allowing for complex, systematic data entry and manipulation. In addition, the team receives support from Harvard University because TMS is in use elsewhere on campus. There was, however, one major drawback: extremely complex queries against TMS were required in order to build the unique website displays on the Digital Giza web application.

In the above example of the tomb of Meresankh III, there are hundreds of photos, finds, videos, and people associated with the object. A typical query to pull together all of this information involves multiple data joins and easily surpasses one hundred lines of code. In addition, future iterations of the dataset may include new types of objects and relationships not previously included within the data model. This poses a challenge for the efficiency and flexibility of the web environment.

It became clear while working on the project that the cost and time required to switch the Giza team to a completely new workflow and database technology would be too prohibitive, and therefore the solution changed: a NoSQL database called ElasticSearch would sit atop TMS, one that could act as a layer between TMS and the web application.

NoSQL frees up the rigidity of the relational data model by implementing a number of alternative data storage solutions. The term itself—NoSQL—represents a wide variety of database types with differing data models, and for Digital Giza this specifically translated to a document model.

This type of data model creates a unique document for each object within the database. This data format is self-contained, meaning the manner of parsing the data within each document is self-described by that document. This is in direct contrast to relational models where tables have universal, well-defined structures that correspond to data entities. In a document model, no two documents need to have the exact same schema. Below are two examples of documents within the database (in JSON format).

[/vc_column_text][vc_gallery el_id=”gallery-202494″ medias=”49711,49712″ gutter_size=”3″ screen_lg=”1000″ screen_md=”600″ screen_sm=”480″ images_size=”one-one” single_overlay_opacity=”50″ single_padding=”2″][vc_column_text]

In addition, documents are typically encoded using commonly accepted web language standards: XML, JSON, BSON, etc. This makes it easy for developers to incorporate the results of database queries into web applications directly. Document models solve a structural problem created by relational databases; they support a flexible data model that can withstand the demands of a rapidly evolving project with polymorphic data and multiple users logging in from around the world.

In this use case, there is a translation of data from one system (TMS) to another (ElasticSearch). Each database solves a different problem, the former serving as a tool for the storage and long-term upkeep of the data using universally accepted museum standards, and the latter to efficiently populate a user interface for navigating the various archeological data on a research platform built for the web.

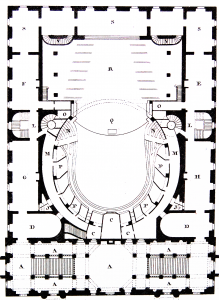

Faculty Case Study: Russian Modules

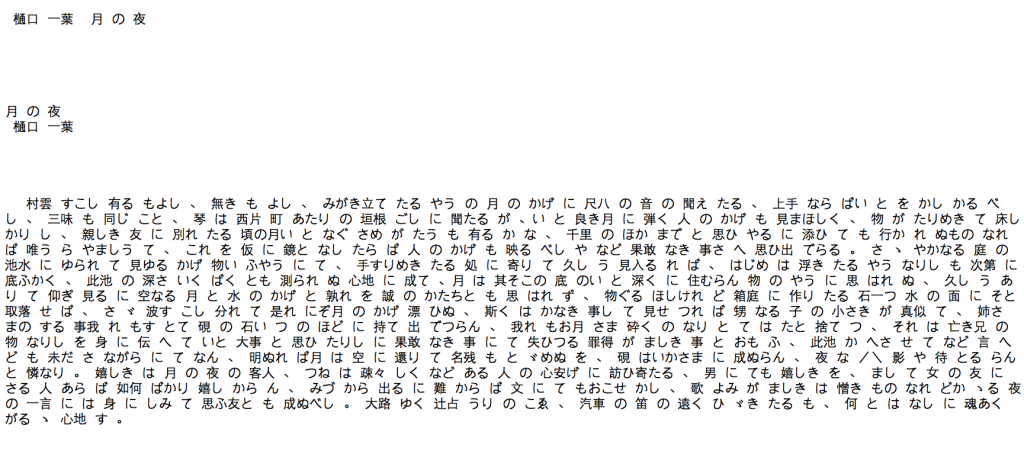

Russian Modules, led by Steven Clancy, Senior Lecturer in Slavic Languages and Literatures and Director of the Slavic Language Program, is a Russian linguistics application designed to support curriculum building, language learning, and related research on the structure of the Russian language. Christopher Morse of Arts & Humanities Research Computing oversees application development, and the project uses a NoSQL graph database called Neo4j.

In its current iteration, users can type Russian text into a form that will automatically parse the information into a variety of categories. These categories range from part of speech, to word inflection, to clusters of common meaning, also called domains. In addition, the tool provides word difficulty levels based on the Russian language curriculum within the Slavic Department in order to gauge whether or not a particular text is too challenging (or not challenging enough) for students to undertake.[/vc_column_text][vc_single_image media=”49668″ caption=”yes” media_width_percent=”100″ alignment=”center”][vc_column_text]Data modeling within a graph database environment is quite different than within a tabular structure. In fact, graph databases do not have a set data modeling style because they were designed to emphasize the relationships between data more than the data itself. They are typically used to model social networks, families, organizations, epidemiological contagion paths, and other interconnected ideas.

The graph structure is straightforward: data must take the form of a node, relationship, or property. The resulting structure is reminiscent of a mind map, a series of circles connected by lines that represent some kind of relationship. Each circle within a graph database is referred to as a node or vertex, and each line that connects two nodes is referred to as an link or edge.

Here is an example view of the word “book” in the Russian Modules database:

[/vc_column_text][vc_custom_heading heading_semantic=”h4″ text_size=”h4″]Lemma Search: книга (book)[/vc_custom_heading][vc_raw_html]JTNDZGl2JTIwaWQlM0QlMjJydV9ib29rJTIyJTNFJTBBJTNDcHJlJTNFTUFUQ0glMjAlMjhsJTNBTGVtbWElMjktJTVCciUzQUlORkxFQ1RTX1RPJTVELSUzRSUyOGYlM0FGb3JtJTI5JTIwV0hFUkUlMjBsLmxhYmVsJTIwJTNEJTIwJTI3JUQwJUJBJUQwJUJEJUQwJUI4JUQwJUIzJUQwJUIwJTI3JTIwUkVUVVJOJTIwbCUyQ3IlMkNmJTNCJTNDJTJGcHJlJTNFJTBBJTNDJTJGZGl2JTNF[/vc_raw_html][vc_column_text]

Within the Russian Modules database, all word forms are nodes. Dictionary forms of the word are labeled lemmas, and grammatical inflections of the lemma are labeled forms (e.g. lemma: книга; form: книги [genitive, ‘of the book’]). Encoded within the relationship are additional properties such as what part of speech a word is inflecting to, or what difficulty level the word has. In the above interactive example, the central word is the lemma, and the connections fanning out from the center each represent different forms of that lemma. The relationship between the lemma and its forms also has a unique name: INFLECTS_TO. This name exhibits the relationship between the nodes very clearly.

The querying language used to interface with Neo4j, Cypher, encourages modeling data in plain English. This philosophy echoes the distant yet perennial wisdom of Abelson and Sussman who wrote in their preface to Structure and Interpretation of Computer Programs that “programs must be written for people to read, and only incidentally for machines to execute.” The Neo4j back end includes built-in graphical functionality powered by D3js, and the Cypher query language allows users to intuitively work with their data. The above example can be simplified into plain English without much of a jump:

[/vc_column_text][vc_raw_html]JTNDcHJlJTNFTUFUQ0glMjAlMjhsJTNBTGVtbWElMjktJTVCciUzQUlORkxFQ1RTX1RPJTVELSUzRSUyOGYlM0FGb3JtJTI5JTIwV0hFUkUlMjBsLmxhYmVsJTIwJTNEJTIwJTI3JUQwJUJBJUQwJUJEJUQwJUI4JUQwJUIzJUQwJUIwJTI3JTIwUkVUVVJOJTIwbCUyQ3IlMkNmJTNCJTNDJTJGcHJlJTNF[/vc_raw_html][vc_column_text]

The query searches for all lemmas that match the label “книга”, and then searches for all associated relationships. The relationship is called INFLECTS_TO, but also has a directional component. (Lemma)-[INFLECTS_TO]->(Form) has “–>” inside of it, a graphical way of describing the relationship.

This free data model allows users to create and remove nodes and connections on the fly with very little code. Traversing the various relationships across the graph is also simplified in comparison to extremely complex relational databases with multiple intermediary tables that connect data. Take for example the following query that searches for the word sister, all of its inflected forms, the domain of words it belongs to, and the other words that also make up that domain:

[/vc_column_text][vc_raw_html]JTNDcHJlJTNFTUFUQ0glMjAlMjhsJTNBTGVtbWElMjAlN0JsYWJlbCUzQSUyNyVEMSU4MSVEMCVCNSVEMSU4MSVEMSU4MiVEMSU4MCVEMCVCMCUyNyU3RCUyOS0lNUJyJTNBSU5GTEVDVFNfVE8lNUQtJTNFJTI4ZiUzQUZvcm0lMjklMkMlMjAlMjhsJTI5LSU1QnIxJTNBSEFTX0RPTUFJTiU1RC0lM0UlMjhkJTI5JTNDLSU1QnIyJTNBSEFTX0RPTUFJTiU1RC0lMjhvJTI5JTBBUkVUVVJOJTIwbCUyQ3IlMkNmJTJDcjElMkNyMiUyQ28lM0MlMkZwcmUlM0U=[/vc_raw_html][vc_single_image media=”49625″ caption=”yes” media_width_percent=”100″ alignment=”center”][vc_column_text]

Future iterations of the project endeavor to include more robust natural language processing functionality, interactive word visualizations, and the integration of the database into an eBook for Russian language study.

[/vc_column_text][vc_raw_js]JTNDc2NyaXB0JTIwc3JjJTNEJTIyaHR0cCUzQSUyRiUyRmQzanMub3JnJTJGZDMudjMubWluLmpzJTIyJTNFJTNDJTJGc2NyaXB0JTNFJTBBJTNDc2NyaXB0JTNFJTBBJTBBdmFyJTIwaGVpZ2h0JTIwJTNEJTIwNTAwJTNCJTBBdmFyJTIwd2lkdGglMjAlM0QlMjAxMjAwJTIwJTJBJTIwLjY2JTNCJTBBJTBBdmFyJTIwZm9yY2UlMjAlM0QlMjBkMy5sYXlvdXQuZm9yY2UlMjglMjklMEElMjAlMjAlMjAlMjAuc2l6ZSUyOCU1QndpZHRoJTJDJTIwaGVpZ2h0JTVEJTI5JTBBJTIwJTIwJTIwJTIwLmNoYXJnZSUyOC00MDAlMjklMEElMjAlMjAlMjAlMjAubGlua0Rpc3RhbmNlJTI4MTAwJTI5JTBBJTIwJTIwJTIwJTIwLm9uJTI4JTIydGljayUyMiUyQyUyMHRpY2slMjklM0IlMEElMEF2YXIlMjBkcmFnJTIwJTNEJTIwZm9yY2UuZHJhZyUyOCUyOSUwQSUyMCUyMCUyMCUyMC5vbiUyOCUyMmRyYWdzdGFydCUyMiUyQyUyMGRyYWdzdGFydCUyOSUzQiUwQSUwQXZhciUyMHN2ZyUyMCUzRCUyMGQzLnNlbGVjdCUyOCUyMiUyM3J1X2Jvb2slMjIlMjkuYXBwZW5kJTI4JTIyc3ZnJTIyJTI5JTBBJTIwJTIwJTIwJTIwLmF0dHIlMjglMjJ3aWR0aCUyMiUyQyUyMHdpZHRoJTI5JTBBJTIwJTIwJTIwJTIwLmF0dHIlMjglMjJoZWlnaHQlMjIlMkMlMjBoZWlnaHQlMjklM0IlMEElMEF2YXIlMjBsaW5rJTIwJTNEJTIwc3ZnLnNlbGVjdEFsbCUyOCUyMi5saW5rJTIyJTI5JTJDJTBBJTIwJTIwJTIwJTIwbm9kZSUyMCUzRCUyMHN2Zy5zZWxlY3RBbGwlMjglMjIubm9kZSUyMiUyOSUzQiUwQSUwQWQzLmpzb24lMjglMjJodHRwJTNBJTJGJTJGZGFydGhjcmltc29uLm9yZyUyRmFydHNodW1yYyUyRmRhdGFiYXNlczIuMCUyRnJ1X2Jvb2suanNvbiUyMiUyQyUyMGZ1bmN0aW9uJTI4ZXJyb3IlMkMlMjBncmFwaCUyOSUyMCU3QiUwQSUyMCUyMGlmJTIwJTI4ZXJyb3IlMjklMjB0aHJvdyUyMGVycm9yJTNCJTBBJTBBJTIwJTIwZm9yY2UlMEElMjAlMjAlMjAlMjAlMjAlMjAubm9kZXMlMjhncmFwaC5ub2RlcyUyOSUwQSUyMCUyMCUyMCUyMCUyMCUyMC5saW5rcyUyOGdyYXBoLmxpbmtzJTI5JTBBJTIwJTIwJTIwJTIwJTIwJTIwLnN0YXJ0JTI4JTI5JTNCJTBBJTBBJTIwJTIwbGluayUyMCUzRCUyMGxpbmsuZGF0YSUyOGdyYXBoLmxpbmtzJTI5JTBBJTIwJTIwJTIwJTIwLmVudGVyJTI4JTI5LmFwcGVuZCUyOCUyMmxpbmUlMjIlMjklMEElMjAlMjAlMjAlMjAlMjAlMjAuYXR0ciUyOCUyMmNsYXNzJTIyJTJDJTIwJTIybGluayUyMiUyOSUzQiUwQSUwQSUyMCUyMG5vZGUlMjAlM0QlMjBub2RlLmRhdGElMjhncmFwaC5ub2RlcyUyOSUwQSUyMCUyMCUyMCUyMC5lbnRlciUyOCUyOS5hcHBlbmQlMjglMjJnJTIyJTI5JTBBJTIwJTIwJTIwJTIwJTIwJTIwLmF0dHIlMjglMjJjbGFzcyUyMiUyQyUyMCUyMm5vZGUlMjIlMjklMEElMjAlMjAlMjAlMjAlMjAlMjAub24lMjglMjJkYmxjbGljayUyMiUyQyUyMGRibGNsaWNrJTI5JTBBJTIwJTIwJTIwJTIwJTIwJTIwLmNhbGwlMjhkcmFnJTI5JTNCJTBBJTBBJTIwJTIwbm9kZS5hcHBlbmQlMjglMjJjaXJjbGUlMjIlMjklMEElMjAlMjAlMjAlMjAlMjAlMjAuYXR0ciUyOCUyMnIlMjIlMkMlMjAxMiUyOSUzQiUwQSUyMCUyMG5vZGUuYXBwZW5kJTI4JTIydGV4dCUyMiUyOSUwQSUyMCUyMCUyMCUyMCUyMCUyMC5hdHRyJTI4JTIyZHglMjIlMkMlMjAxMiUyOSUwQSUyMCUyMCUyMCUyMCUyMCUyMC5hdHRyJTI4JTIyZHklMjIlMkMlMjAlMjIuMzVlbSUyMiUyOSUwQSUyMCUyMCUyMCUyMCUyMCUyMC5hdHRyJTI4JTIyZm9udC1zaXplJTIyJTJDJTIwMTQlMjklMEElMjAlMjAlMjAlMjAlMjAlMjAuYXR0ciUyOCUyMmZvbnQtZmFtaWx5JTIyJTJDJTIwJTIyc2Fucy1zZXJpZiUyMiUyOSUwQSUyMCUyMCUyMCUyMCUyMCUyMC5hdHRyJTI4JTIyc3Ryb2tlJTIyJTJDJTIwJTIyMHB4JTIyJTI5JTBBJTIwJTIwJTIwJTIwJTIwJTIwLnRleHQlMjhmdW5jdGlvbiUyOGQlMjklMjAlN0IlMjByZXR1cm4lMjBkLmxhYmVsJTIwJTdEJTI5JTNCJTBBJTBBJTBBJTdEJTI5JTNCJTBBJTBBZnVuY3Rpb24lMjB0aWNrJTI4JTI5JTIwJTdCJTBBJTIwJTIwbGluay5hdHRyJTI4JTIyeDElMjIlMkMlMjBmdW5jdGlvbiUyOGQlMjklMjAlN0IlMjByZXR1cm4lMjBkLnNvdXJjZS54JTNCJTIwJTdEJTI5JTBBJTIwJTIwJTIwJTIwJTIwJTIwLmF0dHIlMjglMjJ5MSUyMiUyQyUyMGZ1bmN0aW9uJTI4ZCUyOSUyMCU3QiUyMHJldHVybiUyMGQuc291cmNlLnklM0IlMjAlN0QlMjklMEElMjAlMjAlMjAlMjAlMjAlMjAuYXR0ciUyOCUyMngyJTIyJTJDJTIwZnVuY3Rpb24lMjhkJTI5JTIwJTdCJTIwcmV0dXJuJTIwZC50YXJnZXQueCUzQiUyMCU3RCUyOSUwQSUyMCUyMCUyMCUyMCUyMCUyMC5hdHRyJTI4JTIyeTIlMjIlMkMlMjBmdW5jdGlvbiUyOGQlMjklMjAlN0IlMjByZXR1cm4lMjBkLnRhcmdldC55JTNCJTIwJTdEJTI5JTNCJTBBJTIwJTIwbm9kZS5hdHRyJTI4JTIydHJhbnNmb3JtJTIyJTJDJTIwZnVuY3Rpb24lMjhkJTI5JTIwJTdCJTIwcmV0dXJuJTIwJTIydHJhbnNsYXRlJTI4JTIyJTIwJTJCJTIwZC54JTIwJTJCJTIwJTIyJTJDJTIyJTIwJTJCJTIwZC55JTIwJTJCJTIwJTIyJTI5JTIyJTNCJTIwJTdEJTI5JTNCJTBBJTBBJTBBJTdEJTBBJTBBZnVuY3Rpb24lMjBkYmxjbGljayUyOGQlMjklMjAlN0IlMEElMjAlMjBkMy5zZWxlY3QlMjh0aGlzJTI5LmNsYXNzZWQlMjglMjJmaXhlZCUyMiUyQyUyMGQuZml4ZWQlMjAlM0QlMjBmYWxzZSUyOSUzQiUwQSU3RCUwQSUwQWZ1bmN0aW9uJTIwZHJhZ3N0YXJ0JTI4ZCUyOSUyMCU3QiUwQSUyMCUyMGQzLnNlbGVjdCUyOHRoaXMlMjkuY2xhc3NlZCUyOCUyMmZpeGVkJTIyJTJDJTIwZC5maXhlZCUyMCUzRCUyMHRydWUlMjklM0IlMEElN0QlMEElMEElM0MlMkZzY3JpcHQlM0U=[/vc_raw_js][/vc_column][/vc_row]

Conclusion: Looking Forward

The technologies discussed herein comprise a limited selection of database options, each of which varies in complexity, cost, and community size. At Harvard, successful iterations of digital humanities projects making use of both SQL and NoSQL databases have contributed to local communities of practice that continuously push the envelope to explore and describe modern (and emerging) practices for architecting data.

Looking forward, researchers and scholars should always expect at least a minimal learning curve with any new technology. In the end, however, they should not be completely overwhelmed by their digital tools, otherwise the technology has failed to serve its intended purpose. That being said, new does not always mean better, and sometimes the traditional model or approach is the way to go. And finally, sustainability and the projected lifespan of a project are important considerations for any research application built for the web. Be aware of the required upkeep (yearly hosting costs, software upgrades, deprecations, general maintenance, etc.), and use caution with any technology that lacks compatibility with accepted open standards.